-

Notifications

You must be signed in to change notification settings - Fork 2

Move LSTGeometry repo into ESProducer and standalone binary #203

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

base: master

Are you sure you want to change the base?

Conversation

|

I expect that the final code will not use a csv file to generate the geometry bin/txt file. |

| std::vector<Polygon> difference; | ||

| for (auto &ref_polygon_piece : ref_polygon) { | ||

| std::vector<Polygon> tmp_difference; | ||

| boost::geometry::difference(ref_polygon_piece, tar_polygon, tmp_difference); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@slava77 currently the bottleneck is with polygon operations like this one. I'm using boost::geometry, but maybe you have a suggestion for another library. Currently, I'm having some issues with the ordering of points ending up creating self-intersecting polygons or negative areas.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Is this really the slowest part? I would think that the straight line tracing should be the fastest.

is it going to be faster to add all polygons from list_of_detids_etaphi_layer_tar and then subtract them from ref_detid polygon?

here when considering new_tar_detids_to_be_considered and in the tar_detids_to_be_considered loop I'd expect that it would be much faster to place detids in eta-phi bins in each layer with some coarse binning (perhaps 32x32 or so) and then loop only over these eta-phi bins instead over all detids in a layer (access det_geom.getEndcapLayerDetIds(layer, etaphiBin) )

For the endcap, are the layers here for the positive and negative endcaps different or the same? If the same, then it sounds like an obvious speedup should be to skip the wrong side. The eta-phi binning will address this automatically

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I didn't mean this point in particular, just that the polygone operations through the code take up most of the time.

Yeah, binning sounds like the way to go. And for the endcap, I think the layer includes both, so that was another wasteful thing.

| double xnew = x * std::cos(-refphi) - y * std::sin(-refphi); | ||

| double ynew = x * std::sin(-refphi) + y * std::cos(-refphi); | ||

| x = xnew; | ||

| y = ynew; | ||

| } | ||

| double phi = std::atan2(y, x); | ||

| double eta = std::copysign(-std::log(std::tan(std::atan(std::sqrt(x * x + y * y) / std::abs(z)) / 2.)), z); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I'm not sure why this sincos rotation to xnew,ynew is done.

why is the phi here not simply atan2(y,x)-refphi ?

It would be even faster if phi for all corners is precomputed.

Also, eta doesn't depend on refphi, perhaps it's easier to precompute eta for all the corners and use the values directly (including the zshifts of 0, ±10).

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I'm not sure, I took it directly from here, but I'll think about it.

And yeah, pre-computing things would be good

| std::vector<Polygon> ref_polygon; | ||

| ref_polygon.push_back(getEtaPhiPolygon(det_geom.getCorners(ref_detid), refphi, zshift)); | ||

|

|

||

| // Check whether there is still significant non-zero area |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

it would help to add some more detailed description of what is done (it took me a while to stare at this code to figure out what's happening)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I added a bit of a longer explanation above. Let me know if that's sufficient

|

|

||

| for (int zshift : {0, 10, -10}) { | ||

| std::vector<Polygon> ref_polygon; | ||

| ref_polygon.push_back(getEtaPhiPolygon(det_geom.getCorners(ref_detid), refphi, zshift)); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

We probably don't really need polygons (and boost). I'd treat modules as rectangles using the larger side (xyz rectangles are wider in phi on the lower r side) for the positive overlap tests and the narrower side for the subtractive overlaps.

The simplest subtractive overlap can be done by breaking a module in e.g. 10x10 bins and just brute force checking each bin if it's covered or not to get to the endcap modules not covered by barrel.

A more precise and perhaps faster is to collect (ordered) etas and phis from the target modules and bin the reference module in rectangles along these etas and phis.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yeah, it would be ideal to ditch polygons and just work with a simplified approximation. I'll work on this.

|

After binning, it takes about 15 seconds, which is starting to be reasonable. There are more improvements that can be made to make it faster, but for now I'll switch gears to get the CMSSW part in place. |

c2d0f53 to

a5accdb

Compare

|

Not sure if it will work, but let's see what happens. /run cmssw |

|

There was a problem while building and running with CMSSW. The logs can be found here. |

1 similar comment

|

There was a problem while building and running with CMSSW. The logs can be found here. |

|

/run cmssw |

|

There was a problem while building and running with CMSSW. The logs can be found here. |

|

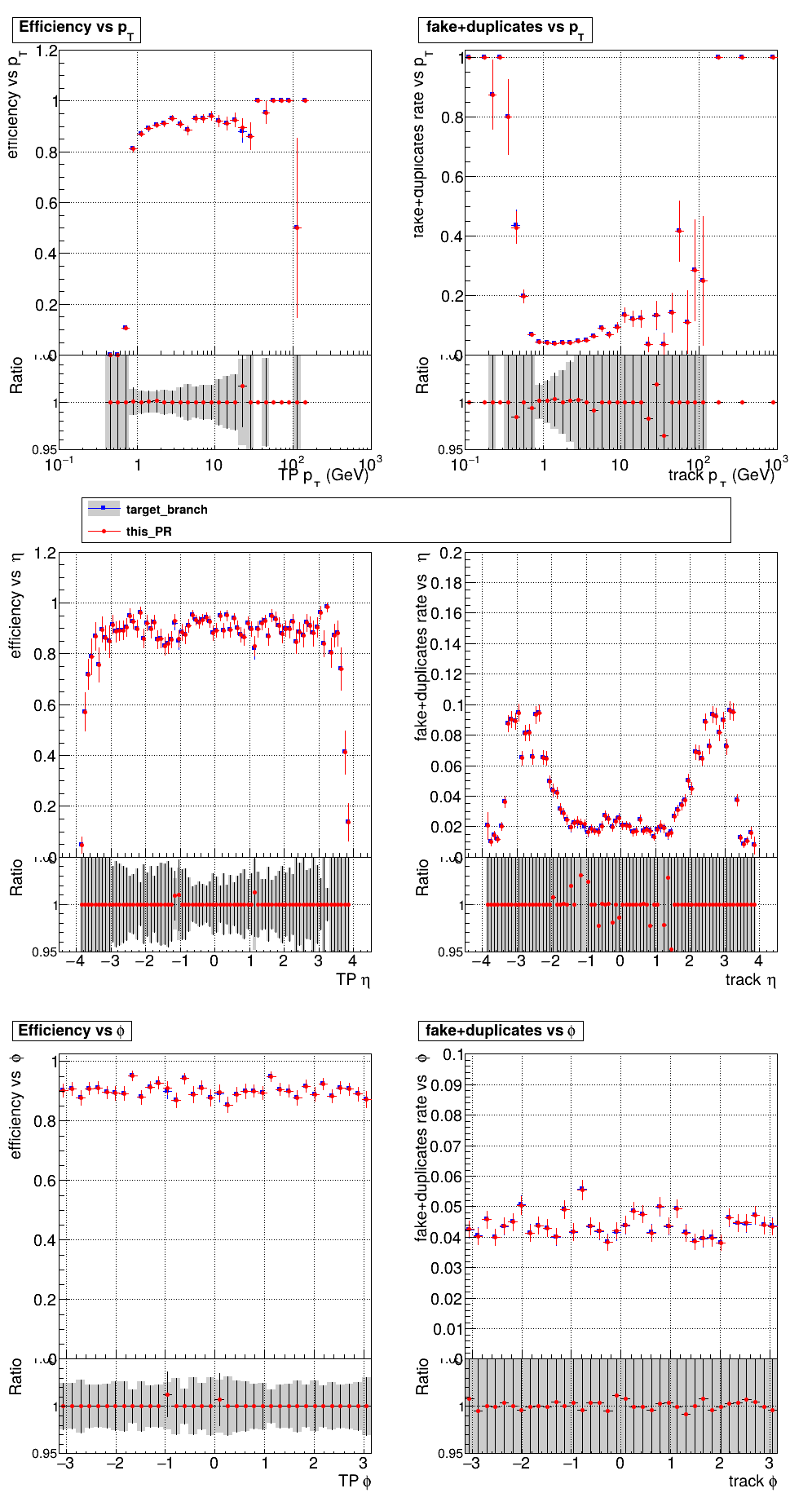

The PR was built and ran successfully with CMSSW. Here are some plots. OOTB All Tracks

The full set of validation and comparison plots can be found here. |

|

There was a problem while building and running with CMSSW. The logs can be found here. |

|

Hmm I'll have to make some tweaks to be able to test the low pt setup, so I'll wrap this up tomorrow. |

|

There was a problem while building and running with CMSSW. The logs can be found here. |

2 similar comments

|

There was a problem while building and running with CMSSW. The logs can be found here. |

|

There was a problem while building and running with CMSSW. The logs can be found here. |

|

/run gpu-cmssw lowpt |

|

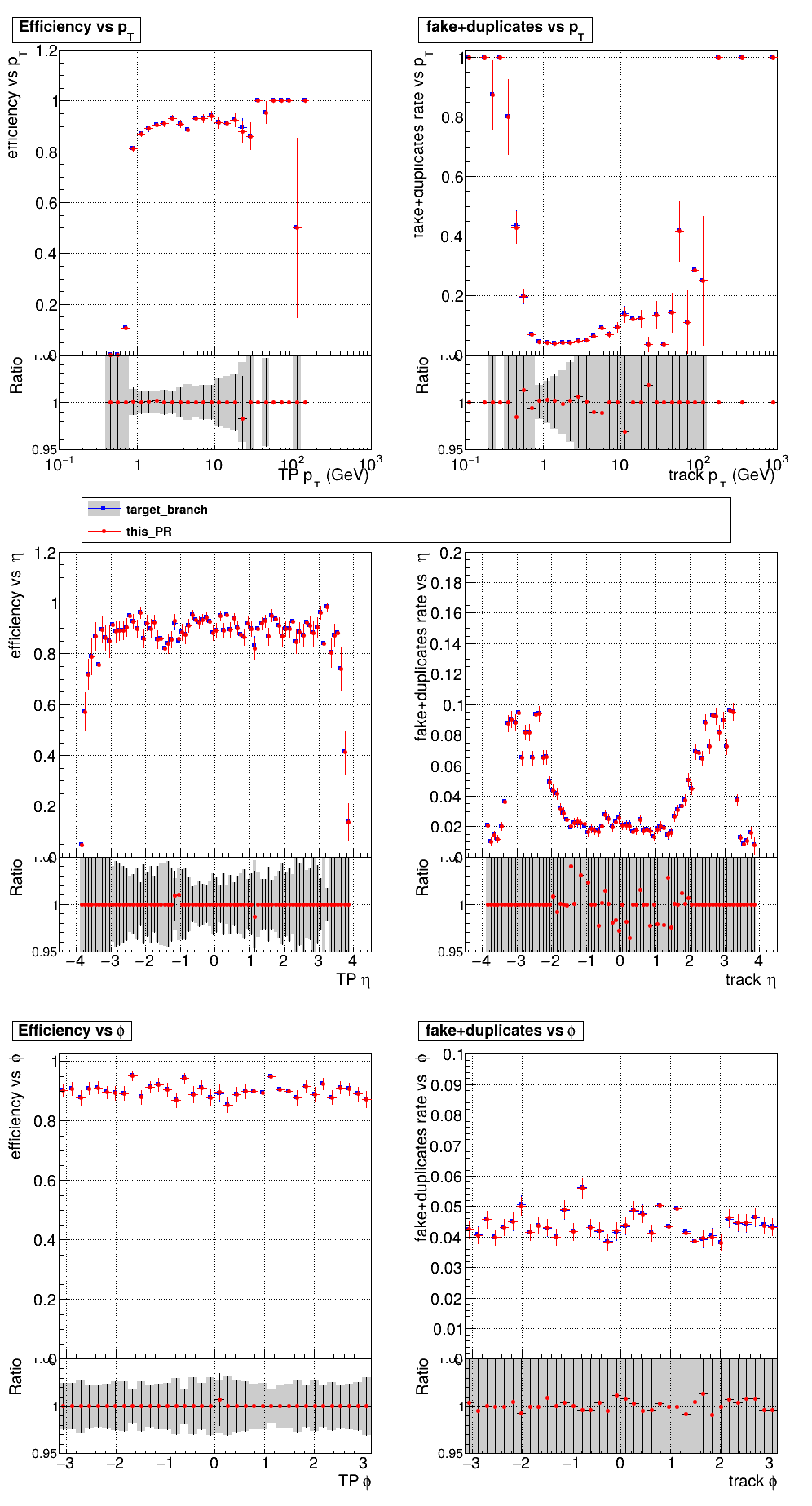

The PR was built and ran successfully with CMSSW (low pT setup) on GPU. Here are some plots. OOTB All Tracks

The full set of validation and comparison plots can be found here. |

|

@slava77 I don't think the lowpt setup is working properly. The plots look the same, so I'm wondering if it was even working before. These are the extra lines that the CI adds to the .py file: https://github.com/SegmentLinking/TrackLooper-actions/blob/cmssw/lowpt_mod.py . Do you know what might be missing? |

how is it applied? if [[ "$LOW_PT" == "true" ]]; then

sed '28r ../lowpt_mod.py' step3_RAW2DIGI_RECO_VALIDATION_DQM.py

fibut this just dumps the concatenated content of the two files to stdout, as you can see in the log perhaps |

|

Oops you're right. I missed the |

|

/run gpu-cmssw lowpt |

btw, why line 28, this seems very brittle: is it the end of the file or do you need to insert before something else? It would be more practical to add a customise function or even a procModifier in the main cmssw source code for the switch to 0.6 GeV, now that it's a multi-line effort. |

|

Yeah, it's very brittle. It would definitely be ideal to add a procModifier. I haven't done this before, but I'll look into how to do this. |

|

The PR was built and ran successfully with CMSSW (low pT setup) on GPU. Here are some plots. OOTB All Tracks

The full set of validation and comparison plots can be found here. |

|

/run gpu-cmssw lowpt |

|

There was a problem while building and running with CMSSW on GPU. The logs can be found here. |

|

/run gpu-cmssw lowpt |

|

There was a problem while building and running with CMSSW on GPU. The logs can be found here. |

slava77

left a comment

slava77

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I'm not sure why after the debugging is complete we need to support reading from the tkLayout csv files.

The cost is some awkward logic that has to pass via the fields defined by the csv files and code that redefines methods that already exist in CMSSW.

Can RecoTracker/LSTCore/interface/LSTGeometry become RecoTracker/LSTGeometry with mostly unconstrained dependencies on CMSSW except for the data format header-only files that are probably useful in the standalone

| setWhatProduced(this, ptCutLabel_); | ||

| : ESProducer(iConfig), ptCut_(iConfig.getParameter<double>("ptCut")) { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I think we should still have a possibility to have multiple payloads in the same job with different thresholds.

| std::unique_ptr<LSTESData<alpaka_common::DevHost>> loadAndFillESHost(std::string& ptCutLabel); | ||

| std::unique_ptr<LSTESData<alpaka_common::DevHost>> loadAndFillESHost(lstgeometry::LSTGeometry const& lstg); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

perhaps rename; now that we have multiple payloads just having a generic method name is unclear. Also, load feels more relevant for loading from a file.

| std::unique_ptr<LSTESData<alpaka_common::DevHost>> loadAndFillESHost(std::string& ptCutLabel); | |

| std::unique_ptr<LSTESData<alpaka_common::DevHost>> loadAndFillESHost(lstgeometry::LSTGeometry const& lstg); | |

| std::unique_ptr<LSTESData<alpaka_common::DevHost>> loadAndFillESDataHost(std::string& ptCutLabel); | |

| std::unique_ptr<LSTESData<alpaka_common::DevHost>> fillESDataHost(lstgeometry::LSTGeometry const& lstg); |

| namespace lstgeometry { | ||

|

|

||

| struct LSTGeometry { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

mostly semantics, but still, lst::Geometry is just as clear, a complete repeat lstgeometry::LSTGeometry seems excessive; a global namespace LSTGeometry is also OK

| static unsigned short parseRod(unsigned int); | ||

| static unsigned short parseRing(unsigned int); | ||

| static unsigned short parseModule(unsigned int); | ||

| static unsigned short parseIsLower(unsigned int); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

perhaps move to bool

| static unsigned short parseIsLower(unsigned int); | |

| static unsigned bool parseIsLower(unsigned int); |

| const unsigned short& rod() const; | ||

| const unsigned short& ring() const; | ||

| const unsigned short& module() const; | ||

| const unsigned short& isLower() const; |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

| const unsigned short& isLower() const; | |

| const unsigned bool& isLower() const; |

or is there a reason to pretend it can have more values?

| namespace lstgeometry { | ||

|

|

||

| //Calculates the Rodrigues' rotation matrix for rotating a vector around an arbitrary axis. | ||

| MatrixD3x3 rodriguesRotationMatrix(ColVectorD3 axis, double theta) { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

this method is used only in L30, why not inline?

| const DetId detId = det->geographicalId(); | ||

|

|

||

| const auto &surface = det->surface(); | ||

| const Bounds *b = &(surface).bounds(); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

| const Bounds *b = &(surface).bounds(); | |

| const Bounds *bounds = &(surface).bounds(); |

| // Calculates the transformed corners of each sensor | ||

| void transformSensorCorners(ModuleInfo& moduleInfo) { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

isn't all of this simpler to be done with the CMSSW geometry objects (rotations and positions are known; here the code reduces to the minimalist ModuleInfo (probably from parameters used in the tkLayout csv file) to reconstitute what's already known

|

|

||

| auto detids_etaphi_layer_ref = det_geom.getDetIds([](const auto &x) { | ||

| auto mod = Module(x.first); | ||

| return ((mod.subdet() == 5 && mod.isLower() == 1 && mod.layer() != 6) || |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

need a comment to describe what this does (exclude the outermost modules that do not have connections to other modules

|

|

||

| namespace lstgeometry { | ||

|

|

||

| class Module { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I think the bit coding details should just rely on CMSSW equivalent (unless I missed a point that this should remain working in standalone) so that this type is just a struct without parse* methods

This is still a work in progress, but I'm opening a draft PR so you can track the progress.

This is relatively close to working for standalone, but I still have to figure out the ESProducer part.